3-tier architecture for a containerized application using Docker or Kubernetes

- Author: Chengchang Yu (@chengchangyu)

📋 Solution Overview

This solution describes a 3-tier containerized application on AWS (EKS, Aurora PostgreSQL, ElastiCache Redis) that is provisioned and deployed through automation, with:

- ✅ Infrastructure as Code (Terraform)

- ✅ GitLab CI/CD with manual approval gates

- ✅ Auto-scaling & High Availability

- ✅ Automated vulnerability scanning

- ✅ Multi-account deployment (dev/test/prod)

- ✅ Cross-region replication

- ✅ Comprehensive monitoring & logging

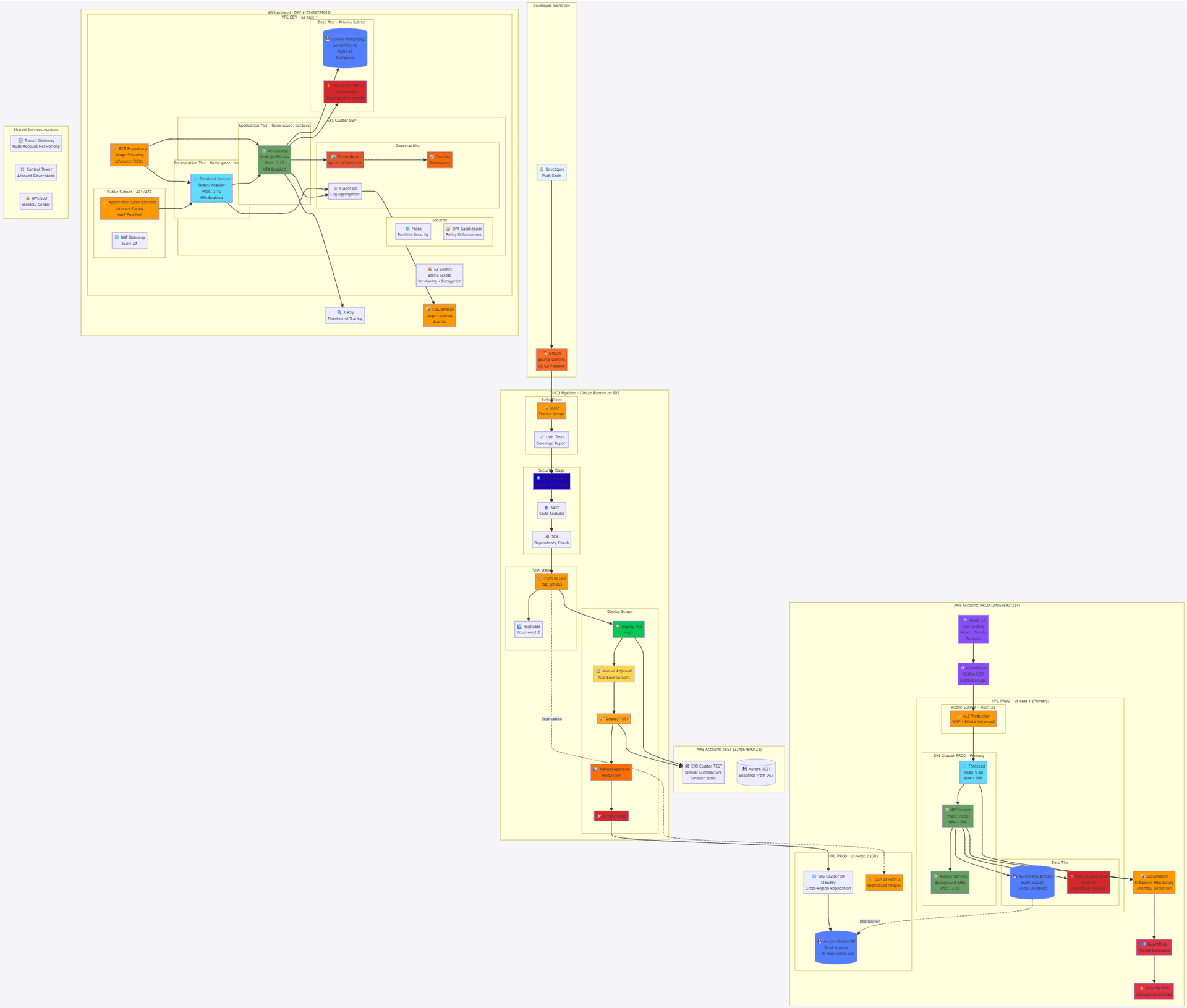

🏗️ Architecture Diagram

AWS 3-Tier Containerized Application Architecture (generated via Claude Sonnet 4.5)

📁 Repository Structure

aws-3tier-containerized-app/

├── README.md # This documentation

├── ARCHITECTURE.md # Detailed architecture decisions

├── .gitlab-ci.yml # GitLab CI/CD pipeline

│

├── terraform/ # Infrastructure as Code

│ ├── modules/

│ │ ├── networking/ # VPC, Subnets, Security Groups

│ │ │ ├── main.tf

│ │ │ ├── variables.tf

│ │ │ └── outputs.tf

│ │ ├── eks/ # EKS Cluster, Node Groups

│ │ │ ├── main.tf

│ │ │ ├── helm.tf # Prometheus, Grafana, Fluent Bit

│ │ │ ├── variables.tf

│ │ │ └── outputs.tf

│ │ ├── rds/ # Aurora PostgreSQL

│ │ │ ├── main.tf

│ │ │ ├── variables.tf

│ │ │ └── outputs.tf

│ │ ├── elasticache/ # Redis Cluster

│ │ │ ├── main.tf

│ │ │ ├── variables.tf

│ │ │ └── outputs.tf

│ │ ├── ecr/ # Container Registry

│ │ │ ├── main.tf

│ │ │ ├── replication.tf # Cross-region replication

│ │ │ ├── variables.tf

│ │ │ └── outputs.tf

│ │ ├── alb/ # Application Load Balancer

│ │ │ ├── main.tf

│ │ │ ├── waf.tf # WAF rules

│ │ │ ├── variables.tf

│ │ │ └── outputs.tf

│ │ ├── monitoring/ # CloudWatch, X-Ray

│ │ │ ├── main.tf

│ │ │ ├── alarms.tf

│ │ │ ├── variables.tf

│ │ │ └── outputs.tf

│ │ └── security/ # IAM, Security Groups, KMS

│ │ ├── main.tf

│ │ ├── iam_roles.tf

│ │ ├── kms.tf

│ │ ├── variables.tf

│ │ └── outputs.tf

│ │

│ ├── environments/

│ │ ├── dev/

│ │ │ ├── main.tf

│ │ │ ├── backend.tf # S3 backend config

│ │ │ ├── terraform.tfvars

│ │ │ └── outputs.tf

│ │ ├── test/

│ │ │ ├── main.tf

│ │ │ ├── backend.tf

│ │ │ ├── terraform.tfvars

│ │ │ └── outputs.tf

│ │ └── prod/

│ │ ├── main.tf

│ │ ├── backend.tf

│ │ ├── terraform.tfvars

│ │ ├── dr.tf # DR region resources

│ │ └── outputs.tf

│ │

│ └── scripts/

│ ├── init-backend.sh # Initialize Terraform backend

│ └── deploy.sh # Deployment wrapper script

│

├── kubernetes/ # K8s Manifests

│ ├── base/ # Kustomize base

│ │ ├── kustomization.yaml

│ │ ├── namespace.yaml

│ │ ├── frontend/

│ │ │ ├── deployment.yaml

│ │ │ ├── service.yaml

│ │ │ ├── hpa.yaml # Horizontal Pod Autoscaler

│ │ │ ├── pdb.yaml # Pod Disruption Budget

│ │ │ └── ingress.yaml

│ │ ├── backend/

│ │ │ ├── deployment.yaml

│ │ │ ├── service.yaml

│ │ │ ├── hpa.yaml

│ │ │ ├── pdb.yaml

│ │ │ ├── configmap.yaml

│ │ │ └── secret.yaml # Sealed Secrets

│ │ └── worker/

│ │ ├── deployment.yaml

│ │ ├── hpa.yaml

│ │ └── pdb.yaml

│ │

│ ├── overlays/

│ │ ├── dev/

│ │ │ ├── kustomization.yaml

│ │ │ └── patches/

│ │ ├── test/

│ │ │ ├── kustomization.yaml

│ │ │ └── patches/

│ │ └── prod/

│ │ ├── kustomization.yaml

│ │ ├── patches/

│ │ └── dr/ # DR-specific configs

│ │

│ └── helm-charts/ # Custom Helm charts

│ └── app/

│ ├── Chart.yaml

│ ├── values.yaml

│ ├── values-dev.yaml

│ ├── values-test.yaml

│ ├── values-prod.yaml

│ └── templates/

│

├── app/ # Application Code

│ ├── frontend/

│ │ ├── Dockerfile

│ │ ├── .dockerignore

│ │ ├── nginx.conf

│ │ ├── src/

│ │ ├── package.json

│ │ └── tests/

│ │

│ ├── backend/

│ │ ├── Dockerfile

│ │ ├── .dockerignore

│ │ ├── src/

│ │ ├── requirements.txt # or package.json

│ │ ├── tests/

│ │ └── migrations/ # DB migrations

│ │

│ └── worker/

│ ├── Dockerfile

│ ├── src/

│ └── tests/

│

├── scripts/

│ ├── build.sh # Build Docker images

│ ├── scan.sh # Run Trivy scan

│ ├── deploy-k8s.sh # Deploy to K8s

│ ├── rollback.sh # Rollback deployment

│ └── health-check.sh # Post-deploy health check (called by deploy-prod)

│

├── monitoring/

│ ├── prometheus/

│ │ ├── values.yaml

│ │ └── alerts.yaml

│ ├── grafana/

│ │ ├── values.yaml

│ │ └── dashboards/

│ │ ├── cluster-overview.json

│ │ ├── application-metrics.json

│ │ └── database-metrics.json

│ └── fluent-bit/

│ └── values.yaml

│

├── security/

│ ├── trivy-config.yaml

│ ├── opa-policies/ # OPA Gatekeeper policies

│ │ ├── require-labels.yaml

│ │ ├── require-resources.yaml

│ │ └── block-privileged.yaml

│ └── network-policies/

│ ├── frontend-policy.yaml

│ ├── backend-policy.yaml

│ └── deny-all-default.yaml

│

└── docs/

├── DEPLOYMENT.md # Deployment guide

├── TROUBLESHOOTING.md # Common issues

├── SECURITY.md # Security best practices

├── MONITORING.md # Monitoring guide

└── DR-RUNBOOK.md # Disaster recovery procedures

🔧 Key Implementation Files

1. .gitlab-ci.yml - CI/CD Pipeline

# .gitlab-ci.yml

variables:

AWS_DEFAULT_REGION: us-east-1

ECR_REGISTRY: ${AWS_ACCOUNT_ID}.dkr.ecr.${AWS_DEFAULT_REGION}.amazonaws.com

IMAGE_TAG: ${CI_COMMIT_SHORT_SHA}

TRIVY_VERSION: "0.48.0"

TERRAFORM_VERSION: "1.6.0"

stages:

- validate

- build

- security

- push

- deploy-dev

- deploy-test

- deploy-prod

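# Validate code quality

# The frontend and backend need different toolchains, so each linter runs in its own image

lint-frontend:

stage: validate

image: node:18-alpine

script:

- cd app/frontend && npm install && npm run lint

only:

- merge_requests

- main

lint-backend:

stage: validate

# The Python version is an assumption; match it to the backend Dockerfile

image: python:3.11-slim

script:

- cd app/backend && pip install -r requirements.txt pylint && pylint src/

only:

- merge_requests

- main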

# Build Docker images

build-frontend:

stage: build

image: docker:24-dind

services:

- docker:24-dind

script:

- cd app/frontend

- docker build -t ${ECR_REGISTRY}/frontend:${IMAGE_TAG} .

- docker save ${ECR_REGISTRY}/frontend:${IMAGE_TAG} -o frontend.tar

artifacts:

paths:

- app/frontend/frontend.tar

expire_in: 1 hour

build-backend:

stage: build

image: docker:24-dind

services:

- docker:24-dind

script:

- cd app/backend

- docker build -t ${ECR_REGISTRY}/backend:${IMAGE_TAG} .

- docker save ${ECR_REGISTRY}/backend:${IMAGE_TAG} -o backend.tar

artifacts:

paths:

- app/backend/backend.tar

expire_in: 1 hour

# Security scanning

# The Trivy image has no Docker CLI, so each job scans the saved tarball directly with --input

trivy-scan-frontend:

stage: security

image:

name: aquasec/trivy:${TRIVY_VERSION}

# Clear the image entrypoint (trivy) so GitLab can run the script lines

entrypoint: [""]

dependencies:

- build-frontend

script:

- trivy image --input app/frontend/frontend.tar --severity HIGH,CRITICAL --exit-code 1

allow_failure: false

trivy-scan-backend:

stage: security

image:

name: aquasec/trivy:${TRIVY_VERSION}

entrypoint: [""]

dependencies:

- build-backend

script:

- trivy image --input app/backend/backend.tar --severity HIGH,CRITICAL --exit-code 1

allow_failure: false

sast:

stage: security

image: returntocorp/semgrep:latest

script:

# GitLab only accepts its own SAST report schema, so emit that instead of plain JSON

- semgrep --config=auto app/ --gitlab-sast -o sast-report.json

artifacts:

reports:

sast: sast-report.json

# Push to ECR

# This job needs both the Docker CLI and the AWS CLI, so it starts from the Docker image and adds aws-cli

push-images:

stage: push

image: docker:24

services:

- docker:24-dind

dependencies:

- build-frontend

- build-backend

before_script:

- apk add --no-cache aws-cli

- aws ecr get-login-password --region ${AWS_DEFAULT_REGION} | docker login --username AWS --password-stdin ${ECR_REGISTRY}

script:

- docker load -i app/frontend/frontend.tar

- docker load -i app/backend/backend.tar

- docker push ${ECR_REGISTRY}/frontend:${IMAGE_TAG}

- docker push ${ECR_REGISTRY}/backend:${IMAGE_TAG}

- docker tag ${ECR_REGISTRY}/frontend:${IMAGE_TAG} ${ECR_REGISTRY}/frontend:latest

- docker tag ${ECR_REGISTRY}/backend:${IMAGE_TAG} ${ECR_REGISTRY}/backend:latest

- docker push ${ECR_REGISTRY}/frontend:latest

- docker push ${ECR_REGISTRY}/backend:latest

only:

- main

# Deploy to DEV (automatic)

deploy-dev:

stage: deploy-dev

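image:

name: hashicorp/terraform:${TERRAFORM_VERSION}

# The default entrypoint is the terraform binary; clear it so GitLab can run the script lines

entrypoint: [""]

environment:

name: dev

url: https://dev.example.com

before_script:

# The script below also calls kustomize, which this line does not install

- apk add --no-cache aws-cli kubectl

# The cluster name follows the Terraform local "${var.environment}-eks-cluster"

- aws eks update-kubeconfig --region ${AWS_DEFAULT_REGION} --name dev-eks-cluster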

script:

- cd kubernetes/overlays/dev

- kustomize edit set image frontend=${ECR_REGISTRY}/frontend:${IMAGE_TAG}

- kustomize edit set image backend=${ECR_REGISTRY}/backend:${IMAGE_TAG}

- kubectl apply -k .

- kubectl rollout status deployment/frontend -n frontend --timeout=5m

- kubectl rollout status deployment/backend -n backend --timeout=5m

only:

- main

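# Deploy to TEST (manual approval)

approve-test:

stage: deploy-test

script:

- echo "Awaiting manual approval for TEST deployment"

when: manual

# Manual jobs default to allow_failure: true, which would let the pipeline continue without approval

allow_failure: false

only:

- main

deploy-test:

stage: deploy-test

image:

name: hashicorp/terraform:${TERRAFORM_VERSION}

entrypoint: [""]

environment:

name: test

url: https://test.example.com

# "dependencies" only selects artifacts; "needs" makes this job wait for the approval job (same-stage needs requires GitLab 14.2+)

needs:

- approve-test

before_script:

- apk add --no-cache aws-cli kubectl

- aws eks update-kubeconfig --region ${AWS_DEFAULT_REGION} --name test-eks-cluster --role-arn arn:aws:iam::${TEST_ACCOUNT_ID}:role/GitLabRunner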

script:

- cd kubernetes/overlays/test

- kustomize edit set image frontend=${ECR_REGISTRY}/frontend:${IMAGE_TAG}

- kustomize edit set image backend=${ECR_REGISTRY}/backend:${IMAGE_TAG}

- kubectl apply -k .

- kubectl rollout status deployment/frontend -n frontend --timeout=5m

- kubectl rollout status deployment/backend -n backend --timeout=5m

only:

- main

# Deploy to PROD (manual approval + rolling update)

approve-prod:

stage: deploy-prod

script:

- echo "Awaiting manual approval for PRODUCTION deployment"

when: manual

# Manual jobs default to allow_failure: true, which would let the pipeline continue without approval

allow_failure: false

only:

- main

deploy-prod:

stage: deploy-prod

image:

name: hashicorp/terraform:${TERRAFORM_VERSION}

entrypoint: [""]

environment:

name: production

url: https://app.example.com

# "dependencies" only selects artifacts; "needs" makes this job wait for the approval job

needs:

- approve-prod

before_script:

- apk add --no-cache aws-cli kubectl

- aws eks update-kubeconfig --region ${AWS_DEFAULT_REGION} --name prod-eks-cluster --role-arn arn:aws:iam::${PROD_ACCOUNT_ID}:role/GitLabRunner

script:

- cd kubernetes/overlays/prod

- kustomize edit set image frontend=${ECR_REGISTRY}/frontend:${IMAGE_TAG}

- kustomize edit set image backend=${ECR_REGISTRY}/backend:${IMAGE_TAG}

# Rolling update: validate with a client-side dry run, then apply. These steps do not implement blue/green.

- kubectl apply -k . --dry-run=client

- kubectl apply -k .

- kubectl rollout status deployment/frontend -n frontend --timeout=10m

- kubectl rollout status deployment/backend -n backend --timeout=10m

# Health check (absolute path, because the job has changed into the overlay directory)

- ${CI_PROJECT_DIR}/scripts/health-check.sh

only:

- main

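# Verify replication to the DR region

# The AWS CLI has no "ecr replicate-image" command. Replication is driven by the registry replication rules (terraform/modules/ecr/replication.tf) and runs when an image is pushed, so this job only checks that the tag has arrived in us-west-2.

verify-dr-replication:

stage: deploy-prod

image:

name: amazon/aws-cli:latest

# Clear the image entrypoint (aws) so GitLab can run the script lines

entrypoint: [""]

needs:

- deploy-prod

script:

- aws ecr describe-images --region us-west-2 --repository-name frontend --image-ids imageTag=${IMAGE_TAG}

- aws ecr describe-images --region us-west-2 --repository-name backend --image-ids imageTag=${IMAGE_TAG}

only:

- main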
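The deploy-prod job ends by calling a health-check script that this post does not show. As a rough sketch of what such a check could do, the Python below polls an endpoint until it returns HTTP 200 or the attempts run out. It is written in Python rather than shell so the retry logic can be unit-tested. The function names, the retry count, the delay, and the idea that `/health/ready` is reachable through the public ingress are all assumptions made for illustration.

```python
import time
import urllib.error
import urllib.request


def http_status(url, timeout=5.0):
    """Return the HTTP status code for url, or 0 if no response arrived."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as exc:
        # The server answered, but with a 4xx/5xx status.
        return exc.code
    except OSError:
        # DNS failure, refused connection, timeout, or TLS error.
        return 0


def wait_until_healthy(url, attempts=10, delay=3.0,
                       fetch=http_status, sleep=time.sleep):
    """Poll url until it returns 200; give up after `attempts` tries.

    fetch and sleep are injectable so the loop can be tested offline.
    """
    for attempt in range(1, attempts + 1):
        if fetch(url) == 200:
            return True
        if attempt < attempts:
            sleep(delay)
    return False
```

A pipeline wrapper could call `wait_until_healthy("https://app.example.com/health/ready")` and exit non-zero when it returns `False`, which would fail the job and leave the decision to run `scripts/rollback.sh` to the operator.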

2. Terraform - EKS Module (terraform/modules/eks/main.tf)

# terraform/modules/eks/main.tf

data "aws_caller_identity" "current" {}

data "aws_region" "current" {}

locals {

cluster_name = "${var.environment}-eks-cluster"

tags = merge(

var.tags,

{

Environment = var.environment

ManagedBy = "Terraform"

Project = "3-tier-app"

}

)

}

# EKS Cluster

resource "aws_eks_cluster" "main" {

name = local.cluster_name

role_arn = aws_iam_role.eks_cluster.arn

version = var.kubernetes_version

vpc_config {

subnet_ids = var.private_subnet_ids

endpoint_private_access = true

endpoint_public_access = var.environment == "prod" ? false : true

public_access_cidrs = var.environment == "prod" ? [] : ["0.0.0.0/0"]

security_group_ids = [aws_security_group.eks_cluster.id]

}

encryption_config {

provider {

key_arn = aws_kms_key.eks.arn

}

resources = ["secrets"]

}

enabled_cluster_log_types = [

"api",

"audit",

"authenticator",

"controllerManager",

"scheduler"

]

depends_on = [

aws_iam_role_policy_attachment.eks_cluster_policy,

aws_cloudwatch_log_group.eks

]

tags = local.tags

}

# CloudWatch Log Group

resource "aws_cloudwatch_log_group" "eks" {

name = "/aws/eks/${local.cluster_name}/cluster"

retention_in_days = var.environment == "prod" ? 90 : 30

kms_key_id = aws_kms_key.eks.arn

tags = local.tags

}

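# KMS Key for EKS encryption

# The same key encrypts the CloudWatch log groups, so its key policy must also allow logs.<region>.amazonaws.com to use it; the default key policy does not grant that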

resource "aws_kms_key" "eks" {

description = "EKS Secret Encryption Key for ${var.environment}"

deletion_window_in_days = var.environment == "prod" ? 30 : 7

enable_key_rotation = true

tags = local.tags

}

resource "aws_kms_alias" "eks" {

name = "alias/eks-${var.environment}"

target_key_id = aws_kms_key.eks.key_id

}

# EKS Node Groups

resource "aws_eks_node_group" "main" {

cluster_name = aws_eks_cluster.main.name

node_group_name = "${var.environment}-node-group"

node_role_arn = aws_iam_role.eks_node.arn

subnet_ids = var.private_subnet_ids

scaling_config {

desired_size = var.node_desired_size

max_size = var.node_max_size

min_size = var.node_min_size

}

update_config {

max_unavailable_percentage = 33

}

instance_types = var.node_instance_types

capacity_type = var.environment == "prod" ? "ON_DEMAND" : "SPOT"

disk_size = 50

labels = {

Environment = var.environment

NodeGroup = "main"

}

tags = merge(

local.tags,

{

"k8s.io/cluster-autoscaler/${local.cluster_name}" = "owned"

"k8s.io/cluster-autoscaler/enabled" = "true"

}

)

depends_on = [

aws_iam_role_policy_attachment.eks_node_policy,

aws_iam_role_policy_attachment.eks_cni_policy,

aws_iam_role_policy_attachment.eks_container_registry_policy,

]

}

# IAM Role for EKS Cluster

resource "aws_iam_role" "eks_cluster" {

name = "${var.environment}-eks-cluster-role"

assume_role_policy = jsonencode({

Version = "2012-10-17"

Statement = [{

Action = "sts:AssumeRole"

Effect = "Allow"

Principal = {

Service = "eks.amazonaws.com"

}

}]

})

tags = local.tags

}

resource "aws_iam_role_policy_attachment" "eks_cluster_policy" {

policy_arn = "arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"

role = aws_iam_role.eks_cluster.name

}

# IAM Role for EKS Nodes

resource "aws_iam_role" "eks_node" {

name = "${var.environment}-eks-node-role"

assume_role_policy = jsonencode({

Version = "2012-10-17"

Statement = [{

Action = "sts:AssumeRole"

Effect = "Allow"

Principal = {

Service = "ec2.amazonaws.com"

}

}]

})

tags = local.tags

}

resource "aws_iam_role_policy_attachment" "eks_node_policy" {

policy_arn = "arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy"

role = aws_iam_role.eks_node.name

}

resource "aws_iam_role_policy_attachment" "eks_cni_policy" {

policy_arn = "arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy"

role = aws_iam_role.eks_node.name

}

resource "aws_iam_role_policy_attachment" "eks_container_registry_policy" {

policy_arn = "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly"

role = aws_iam_role.eks_node.name

}

# Additional IAM policy for CloudWatch and X-Ray

resource "aws_iam_role_policy" "eks_node_additional" {

name = "${var.environment}-eks-node-additional-policy"

role = aws_iam_role.eks_node.id

policy = jsonencode({

Version = "2012-10-17"

Statement = [

{

Effect = "Allow"

Action = [

"cloudwatch:PutMetricData",

"ec2:DescribeVolumes",

"ec2:DescribeTags",

"logs:PutLogEvents",

"logs:CreateLogGroup",

"logs:CreateLogStream",

"logs:DescribeLogGroups",

"logs:DescribeLogStreams"

]

Resource = "*"

},

{

Effect = "Allow"

Action = [

"xray:PutTraceSegments",

"xray:PutTelemetryRecords"

]

Resource = "*"

}

]

})

}

# Security Group for EKS Cluster

resource "aws_security_group" "eks_cluster" {

name_prefix = "${var.environment}-eks-cluster-sg"

description = "Security group for EKS cluster control plane"

vpc_id = var.vpc_id

egress {

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

description = "Allow all outbound traffic"

}

tags = merge(

local.tags,

{

Name = "${var.environment}-eks-cluster-sg"

}

)

}

resource "aws_security_group_rule" "cluster_ingress_workstation_https" {

description = "Allow workstation to communicate with the cluster API Server"

type = "ingress"

from_port = 443

to_port = 443

protocol = "tcp"

cidr_blocks = var.allowed_cidr_blocks

security_group_id = aws_security_group.eks_cluster.id

}

# OIDC Provider for IRSA (IAM Roles for Service Accounts)

data "tls_certificate" "eks" {

url = aws_eks_cluster.main.identity[0].oidc[0].issuer

}

resource "aws_iam_openid_connect_provider" "eks" {

client_id_list = ["sts.amazonaws.com"]

thumbprint_list = [data.tls_certificate.eks.certificates[0].sha1_fingerprint]

url = aws_eks_cluster.main.identity[0].oidc[0].issuer

tags = local.tags

}

# Cluster Autoscaler IAM Role

resource "aws_iam_role" "cluster_autoscaler" {

name = "${var.environment}-eks-cluster-autoscaler"

assume_role_policy = jsonencode({

Version = "2012-10-17"

Statement = [{

Action = "sts:AssumeRoleWithWebIdentity"

Effect = "Allow"

Principal = {

Federated = aws_iam_openid_connect_provider.eks.arn

}

Condition = {

StringEquals = {

"${replace(aws_iam_openid_connect_provider.eks.url, "https://", "")}:sub" = "system:serviceaccount:kube-system:cluster-autoscaler"

"${replace(aws_iam_openid_connect_provider.eks.url, "https://", "")}:aud" = "sts.amazonaws.com"

}

}

}]

})

tags = local.tags

}

resource "aws_iam_role_policy" "cluster_autoscaler" {

name = "${var.environment}-cluster-autoscaler-policy"

role = aws_iam_role.cluster_autoscaler.id

policy = jsonencode({

Version = "2012-10-17"

Statement = [

{

Effect = "Allow"

Action = [

"autoscaling:DescribeAutoScalingGroups",

"autoscaling:DescribeAutoScalingInstances",

"autoscaling:DescribeLaunchConfigurations",

"autoscaling:DescribeScalingActivities",

"autoscaling:DescribeTags",

"ec2:DescribeInstanceTypes",

"ec2:DescribeLaunchTemplateVersions"

]

Resource = "*"

},

{

Effect = "Allow"

Action = [

"autoscaling:SetDesiredCapacity",

"autoscaling:TerminateInstanceInAutoScalingGroup",

"ec2:DescribeImages",

"ec2:GetInstanceTypesFromInstanceRequirements",

"eks:DescribeNodegroup"

]

Resource = "*"

}

]

})

}

# AWS Load Balancer Controller IAM Role

resource "aws_iam_role" "aws_load_balancer_controller" {

name = "${var.environment}-aws-load-balancer-controller"

assume_role_policy = jsonencode({

Version = "2012-10-17"

Statement = [{

Action = "sts:AssumeRoleWithWebIdentity"

Effect = "Allow"

Principal = {

Federated = aws_iam_openid_connect_provider.eks.arn

}

Condition = {

StringEquals = {

"${replace(aws_iam_openid_connect_provider.eks.url, "https://", "")}:sub" = "system:serviceaccount:kube-system:aws-load-balancer-controller"

"${replace(aws_iam_openid_connect_provider.eks.url, "https://", "")}:aud" = "sts.amazonaws.com"

}

}

}]

})

tags = local.tags

}

resource "aws_iam_role_policy_attachment" "aws_load_balancer_controller" {

policy_arn = aws_iam_policy.aws_load_balancer_controller.arn

role = aws_iam_role.aws_load_balancer_controller.name

}

resource "aws_iam_policy" "aws_load_balancer_controller" {

name = "${var.environment}-AWSLoadBalancerControllerIAMPolicy"

description = "IAM policy for AWS Load Balancer Controller"

policy = file("${path.module}/policies/aws-load-balancer-controller-policy.json")

}

# EBS CSI Driver IAM Role

resource "aws_iam_role" "ebs_csi_driver" {

name = "${var.environment}-ebs-csi-driver"

assume_role_policy = jsonencode({

Version = "2012-10-17"

Statement = [{

Action = "sts:AssumeRoleWithWebIdentity"

Effect = "Allow"

Principal = {

Federated = aws_iam_openid_connect_provider.eks.arn

}

Condition = {

StringEquals = {

"${replace(aws_iam_openid_connect_provider.eks.url, "https://", "")}:sub" = "system:serviceaccount:kube-system:ebs-csi-controller-sa"

"${replace(aws_iam_openid_connect_provider.eks.url, "https://", "")}:aud" = "sts.amazonaws.com"

}

}

}]

})

tags = local.tags

}

resource "aws_iam_role_policy_attachment" "ebs_csi_driver" {

policy_arn = "arn:aws:iam::aws:policy/service-role/AmazonEBSCSIDriverPolicy"

role = aws_iam_role.ebs_csi_driver.name

}

# EKS Addons

resource "aws_eks_addon" "vpc_cni" {

cluster_name = aws_eks_cluster.main.name

addon_name = "vpc-cni"

addon_version = var.vpc_cni_version

resolve_conflicts = "OVERWRITE"

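# service_account_role_arn is omitted on purpose: the node role trusts ec2.amazonaws.com rather than the cluster OIDC provider, so it cannot be assumed through IRSA. The CNI relies on the AmazonEKS_CNI_Policy already attached to the node role.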

tags = local.tags

}

resource "aws_eks_addon" "coredns" {

cluster_name = aws_eks_cluster.main.name

addon_name = "coredns"

addon_version = var.coredns_version

resolve_conflicts = "OVERWRITE"

tags = local.tags

depends_on = [aws_eks_node_group.main]

}

resource "aws_eks_addon" "kube_proxy" {

cluster_name = aws_eks_cluster.main.name

addon_name = "kube-proxy"

addon_version = var.kube_proxy_version

resolve_conflicts = "OVERWRITE"

tags = local.tags

}

resource "aws_eks_addon" "ebs_csi_driver" {

cluster_name = aws_eks_cluster.main.name

addon_name = "aws-ebs-csi-driver"

addon_version = var.ebs_csi_driver_version

resolve_conflicts = "OVERWRITE"

service_account_role_arn = aws_iam_role.ebs_csi_driver.arn

tags = local.tags

}

# Outputs

output "cluster_id" {

description = "EKS cluster ID"

value = aws_eks_cluster.main.id

}

output "cluster_endpoint" {

description = "Endpoint for EKS control plane"

value = aws_eks_cluster.main.endpoint

}

output "cluster_security_group_id" {

description = "Security group ID attached to the EKS cluster"

value = aws_security_group.eks_cluster.id

}

output "cluster_oidc_issuer_url" {

description = "The URL on the EKS cluster OIDC Issuer"

value = aws_eks_cluster.main.identity[0].oidc[0].issuer

}

output "cluster_certificate_authority_data" {

description = "Base64 encoded certificate data required to communicate with the cluster"

value = aws_eks_cluster.main.certificate_authority[0].data

sensitive = true

}

output "cluster_autoscaler_role_arn" {

description = "IAM role ARN for cluster autoscaler"

value = aws_iam_role.cluster_autoscaler.arn

}

output "aws_load_balancer_controller_role_arn" {

description = "IAM role ARN for AWS Load Balancer Controller"

value = aws_iam_role.aws_load_balancer_controller.arn

}
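The four IRSA roles in this module (cluster autoscaler, load balancer controller, EBS CSI driver, and later the X-Ray daemon) all share one trust-policy shape. Only the namespace and service account name in the `:sub` condition change. The sketch below rebuilds that document in Python to make the pattern explicit. The function name is hypothetical, and the ARN and issuer URL in the usage note are placeholder values, not outputs of the real module.

```python
import json


def irsa_trust_policy(provider_arn, issuer_url, namespace, service_account):
    """Build the assume-role policy that binds one IAM role to one
    Kubernetes service account through the cluster's OIDC provider."""
    # Same transformation as replace(url, "https://", "") in the Terraform code.
    issuer = issuer_url.replace("https://", "", 1)
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": "sts:AssumeRoleWithWebIdentity",
            "Principal": {"Federated": provider_arn},
            "Condition": {"StringEquals": {
                # Only this exact service account may assume the role.
                f"{issuer}:sub": f"system:serviceaccount:{namespace}:{service_account}",
                # The token must have been issued for AWS STS.
                f"{issuer}:aud": "sts.amazonaws.com",
            }},
        }],
    }


def to_json(policy):
    """Serialize the policy the way jsonencode() would hand it to IAM."""
    return json.dumps(policy, indent=2)
```

For example, `irsa_trust_policy("arn:aws:iam::111122223333:oidc-provider/oidc.eks.us-east-1.amazonaws.com/id/EXAMPLE", "https://oidc.eks.us-east-1.amazonaws.com/id/EXAMPLE", "kube-system", "cluster-autoscaler")` yields the same structure as the `cluster_autoscaler` role above. Pinning both `:sub` and `:aud` stops any other service account in the cluster from assuming the role.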

3. Terraform - EKS Helm Charts (terraform/modules/eks/helm.tf)

# terraform/modules/eks/helm.tf

# Helm provider configuration

provider "helm" {

kubernetes {

host = aws_eks_cluster.main.endpoint

cluster_ca_certificate = base64decode(aws_eks_cluster.main.certificate_authority[0].data)

exec {

api_version = "client.authentication.k8s.io/v1beta1"

args = ["eks", "get-token", "--cluster-name", aws_eks_cluster.main.name]

command = "aws"

}

}

}

provider "kubernetes" {

host = aws_eks_cluster.main.endpoint

cluster_ca_certificate = base64decode(aws_eks_cluster.main.certificate_authority[0].data)

exec {

api_version = "client.authentication.k8s.io/v1beta1"

args = ["eks", "get-token", "--cluster-name", aws_eks_cluster.main.name]

command = "aws"

}

}

# Cluster Autoscaler

resource "helm_release" "cluster_autoscaler" {

name = "cluster-autoscaler"

repository = "https://kubernetes.github.io/autoscaler"

chart = "cluster-autoscaler"

namespace = "kube-system"

version = "9.29.3"

set {

name = "autoDiscovery.clusterName"

value = aws_eks_cluster.main.name

}

set {

name = "awsRegion"

value = data.aws_region.current.name

}

set {

name = "rbac.serviceAccount.annotations.eks\\.amazonaws\\.com/role-arn"

value = aws_iam_role.cluster_autoscaler.arn

}

set {

name = "rbac.serviceAccount.name"

value = "cluster-autoscaler"

}

depends_on = [aws_eks_node_group.main]

}

# AWS Load Balancer Controller

resource "helm_release" "aws_load_balancer_controller" {

name = "aws-load-balancer-controller"

repository = "https://aws.github.io/eks-charts"

chart = "aws-load-balancer-controller"

namespace = "kube-system"

version = "1.6.2"

set {

name = "clusterName"

value = aws_eks_cluster.main.name

}

set {

name = "serviceAccount.create"

value = "true"

}

set {

name = "serviceAccount.name"

value = "aws-load-balancer-controller"

}

set {

name = "serviceAccount.annotations.eks\\.amazonaws\\.com/role-arn"

value = aws_iam_role.aws_load_balancer_controller.arn

}

depends_on = [aws_eks_node_group.main]

}

# Metrics Server

resource "helm_release" "metrics_server" {

name = "metrics-server"

repository = "https://kubernetes-sigs.github.io/metrics-server/"

chart = "metrics-server"

namespace = "kube-system"

version = "3.11.0"

set {

name = "args[0]"

value = "--kubelet-preferred-address-types=InternalIP"

}

depends_on = [aws_eks_node_group.main]

}

# Prometheus Stack (Prometheus + Grafana + Alertmanager)

resource "helm_release" "prometheus_stack" {

name = "prometheus"

repository = "https://prometheus-community.github.io/helm-charts"

chart = "kube-prometheus-stack"

namespace = "monitoring"

version = "51.3.0"

create_namespace = true

values = [

templatefile("${path.module}/helm-values/prometheus-values.yaml", {

environment = var.environment

retention_days = var.environment == "prod" ? "30d" : "7d"

storage_class = "gp3"

storage_size = var.environment == "prod" ? "100Gi" : "50Gi"

grafana_admin_pwd = var.grafana_admin_password

})

]

depends_on = [aws_eks_node_group.main]

}

# Fluent Bit for log aggregation

resource "helm_release" "fluent_bit" {

name = "fluent-bit"

repository = "https://fluent.github.io/helm-charts"

chart = "fluent-bit"

namespace = "logging"

version = "0.39.0"

create_namespace = true

values = [

templatefile("${path.module}/helm-values/fluent-bit-values.yaml", {

aws_region = data.aws_region.current.name

cluster_name = aws_eks_cluster.main.name

log_group_name = aws_cloudwatch_log_group.application.name

})

]

depends_on = [aws_eks_node_group.main]

}

# CloudWatch Log Group for application logs

resource "aws_cloudwatch_log_group" "application" {

name = "/aws/eks/${local.cluster_name}/application"

retention_in_days = var.environment == "prod" ? 90 : 30

kms_key_id = aws_kms_key.eks.arn

tags = local.tags

}

# X-Ray Daemon

resource "kubernetes_daemonset" "xray_daemon" {

metadata {

name = "xray-daemon"

namespace = "kube-system"

}

spec {

selector {

match_labels = {

app = "xray-daemon"

}

}

template {

metadata {

labels = {

app = "xray-daemon"

}

}

spec {

service_account_name = kubernetes_service_account.xray.metadata[0].name

container {

name = "xray-daemon"

image = "amazon/aws-xray-daemon:latest"

port {

name = "xray-ingest"

container_port = 2000

protocol = "UDP"

}

port {

name = "xray-tcp"

container_port = 2000

protocol = "TCP"

}

resources {

limits = {

memory = "256Mi"

cpu = "200m"

}

requests = {

memory = "128Mi"

cpu = "100m"

}

}

}

}

}

}

depends_on = [aws_eks_node_group.main]

}

resource "kubernetes_service" "xray_daemon" {

metadata {

name = "xray-service"

namespace = "kube-system"

}

spec {

selector = {

app = "xray-daemon"

}

port {

name = "xray-ingest"

port = 2000

target_port = 2000

protocol = "UDP"

}

port {

name = "xray-tcp"

port = 2000

target_port = 2000

protocol = "TCP"

}

type = "ClusterIP"

}

}

resource "kubernetes_service_account" "xray" {

metadata {

name = "xray-daemon"

namespace = "kube-system"

annotations = {

"eks.amazonaws.com/role-arn" = aws_iam_role.xray.arn

}

}

}

resource "aws_iam_role" "xray" {

name = "${var.environment}-eks-xray-daemon"

assume_role_policy = jsonencode({

Version = "2012-10-17"

Statement = [{

Action = "sts:AssumeRoleWithWebIdentity"

Effect = "Allow"

Principal = {

Federated = aws_iam_openid_connect_provider.eks.arn

}

Condition = {

StringEquals = {

"${replace(aws_iam_openid_connect_provider.eks.url, "https://", "")}:sub" = "system:serviceaccount:kube-system:xray-daemon"

"${replace(aws_iam_openid_connect_provider.eks.url, "https://", "")}:aud" = "sts.amazonaws.com"

}

}

}]

})

tags = local.tags

}

resource "aws_iam_role_policy_attachment" "xray" {

policy_arn = "arn:aws:iam::aws:policy/AWSXRayDaemonWriteAccess"

role = aws_iam_role.xray.name

}

# OPA Gatekeeper for policy enforcement

resource "helm_release" "gatekeeper" {

name = "gatekeeper"

repository = "https://open-policy-agent.github.io/gatekeeper/charts"

chart = "gatekeeper"

namespace = "gatekeeper-system"

version = "3.14.0"

create_namespace = true

set {

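# The Gatekeeper chart exposes the audit frequency (in seconds) as the top-level value auditInterval

name = "auditInterval"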

value = "60"

}

depends_on = [aws_eks_node_group.main]

}

# Falco for runtime security

resource "helm_release" "falco" {

name = "falco"

repository = "https://falcosecurity.github.io/charts"

chart = "falco"

namespace = "falco"

version = "3.8.4"

create_namespace = true

set {

name = "falco.grpc.enabled"

value = "true"

}

set {

# Falco configuration keys are snake_case, so the gRPC output toggle is grpc_output

name = "falco.grpc_output.enabled"

value = "true"

}

count = var.environment == "prod" ? 1 : 0

depends_on = [aws_eks_node_group.main]

}
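Both Terraform files switch several settings on `var.environment == "prod"`, and the conditionals are spread across many resources. The sketch below gathers them into one lookup so the prod and non-prod differences can be read side by side. Every value is copied from the listings above. The function and key names are made up for illustration and are not part of the module.

```python
def environment_settings(environment):
    """Summarize the prod / non-prod switches used in the EKS module."""
    is_prod = environment == "prod"
    return {
        # aws_eks_cluster.main: the API endpoint is private-only in prod.
        "endpoint_public_access": not is_prod,
        # aws_eks_node_group.main: Spot capacity everywhere except prod.
        "node_capacity_type": "ON_DEMAND" if is_prod else "SPOT",
        # aws_cloudwatch_log_group.eks and .application
        "log_retention_days": 90 if is_prod else 30,
        # aws_kms_key.eks
        "kms_deletion_window_days": 30 if is_prod else 7,
        # helm_release.prometheus_stack template variables
        "prometheus_retention": "30d" if is_prod else "7d",
        "prometheus_storage": "100Gi" if is_prod else "50Gi",
        # helm_release.falco uses count, so it exists only in prod.
        "falco_enabled": is_prod,
    }
```

Calling it for `"dev"`, `"test"`, and `"prod"` shows that dev and test are configured identically by this module. Any difference between those two has to come from `terraform.tfvars` or the Kustomize overlays.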

4. Kubernetes Deployment - Backend (kubernetes/base/backend/deployment.yaml)

# kubernetes/base/backend/deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: backend

namespace: backend

labels:

app: backend

tier: application

version: v1

spec:

replicas: 3

strategy:

type: RollingUpdate

rollingUpdate:

maxSurge: 1

maxUnavailable: 0

selector:

matchLabels:

app: backend

template:

metadata:

labels:

app: backend

tier: application

version: v1

annotations:

prometheus.io/scrape: "true"

prometheus.io/port: "8080"

prometheus.io/path: "/metrics"

spec:

serviceAccountName: backend-sa

securityContext:

runAsNonRoot: true

runAsUser: 1000

fsGroup: 1000

seccompProfile:

type: RuntimeDefault

affinity:

podAntiAffinity:

preferredDuringSchedulingIgnoredDuringExecution:

- weight: 100

podAffinityTerm:

labelSelector:

matchExpressions:

- key: app

operator: In

values:

- backend

topologyKey: kubernetes.io/hostname

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/arch

operator: In

values:

- amd64

- arm64

containers:

- name: backend

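# Placeholder image name: CI runs "kustomize edit set image backend=<registry>/backend:<tag>", which matches on the name "backend" and would not match a full registry path

image: backend:latest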

imagePullPolicy: Always

ports:

- name: http

containerPort: 8080

protocol: TCP

env:

- name: PORT

value: "8080"

- name: ENVIRONMENT

valueFrom:

configMapKeyRef:

name: backend-config

key: environment

- name: LOG_LEVEL

valueFrom:

configMapKeyRef:

name: backend-config

key: log_level

- name: DATABASE_HOST

valueFrom:

secretKeyRef:

name: backend-secrets

key: database_host

- name: DATABASE_PORT

valueFrom:

secretKeyRef:

name: backend-secrets

key: database_port

- name: DATABASE_NAME

valueFrom:

secretKeyRef:

name: backend-secrets

key: database_name

- name: DATABASE_USER

valueFrom:

secretKeyRef:

name: backend-secrets

key: database_user

- name: DATABASE_PASSWORD

valueFrom:

secretKeyRef:

name: backend-secrets

key: database_password

- name: REDIS_HOST

valueFrom:

secretKeyRef:

name: backend-secrets

key: redis_host

- name: REDIS_PORT

valueFrom:

secretKeyRef:

name: backend-secrets

key: redis_port

- name: AWS_XRAY_DAEMON_ADDRESS

value: "xray-service.kube-system:2000"

- name: AWS_XRAY_TRACING_NAME

value: "backend-service"

resources:

requests:

memory: "512Mi"

cpu: "250m"

limits:

memory: "1Gi"

cpu: "1000m"

livenessProbe:

httpGet:

path: /health/live

port: http

initialDelaySeconds: 30

periodSeconds: 10

timeoutSeconds: 5

failureThreshold: 3

readinessProbe:

httpGet:

path: /health/ready

port: http

initialDelaySeconds: 10

periodSeconds: 5

timeoutSeconds: 3

failureThreshold: 3

startupProbe:

httpGet:

path: /health/startup

port: http

initialDelaySeconds: 0

periodSeconds: 5

timeoutSeconds: 3

failureThreshold: 30

securityContext:

allowPrivilegeEscalation: false

readOnlyRootFilesystem: true

runAsNonRoot: true

runAsUser: 1000

capabilities:

drop:

- ALL

volumeMounts:

- name: tmp

mountPath: /tmp

- name: cache

mountPath: /app/cache

volumes:

- name: tmp

emptyDir: {}

- name: cache

emptyDir: {}

topologySpreadConstraints:

- maxSkew: 1

topologyKey: topology.kubernetes.io/zone

whenUnsatisfiable: ScheduleAnyway

labelSelector:

matchLabels:

app: backend
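The three probes in this manifest imply concrete time budgets that are easy to miss when reading the YAML. The sketch below does the arithmetic as an approximation: it ignores `timeoutSeconds` and scheduling jitter, and the function name is made up for illustration.

```python
def failure_window_seconds(period_seconds, failure_threshold, initial_delay_seconds=0):
    """Approximate time a probe must keep failing before Kubernetes acts.

    For a startup probe this is how long the container has to come up
    before it is killed. For a liveness probe it is how long the container
    can be unhealthy before a restart. For a readiness probe it is how long
    before the pod is removed from Service endpoints.
    """
    return initial_delay_seconds + period_seconds * failure_threshold


# Values copied from the backend deployment above.
startup_budget = failure_window_seconds(period_seconds=5, failure_threshold=30)
liveness_window = failure_window_seconds(period_seconds=10, failure_threshold=3)
readiness_window = failure_window_seconds(period_seconds=5, failure_threshold=3)
```

With these settings the backend gets about 150 seconds to start. A hung container is restarted after roughly 30 seconds of failed liveness checks, and an unready pod stops receiving traffic after about 15 seconds. If database migrations run at startup and can exceed 150 seconds, the startup probe's `failureThreshold` is the value to raise.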

5. Kubernetes HPA (kubernetes/base/backend/hpa.yaml)

# kubernetes/base/backend/hpa.yaml

apiVersion: autoscaling/v2

kind: HorizontalPodAutoscaler

metadata:

name: backend-hpa

namespace: backend

spec:

scaleTargetRef:

apiVersion: apps/v1

kind: Deployment

name: backend

minReplicas: 3

maxReplicas: 15

metrics:

- type: Resource

resource:

name: cpu

target:

type: Utilization

averageUtilization: 70

- type: Resource

resource:

name: memory

target:

type: Utilization

averageUtilization: 80

- type: Pods

pods:

metric:

name: http_requests_per_second

target:

type: AverageValue

averageValue: "1000"

behavior:

scaleDown:

stabilizationWindowSeconds: 300

policies:

- type: Percent

value: 50

periodSeconds: 60

- type: Pods

value: 2

periodSeconds: 60

selectPolicy: Min

scaleUp:

stabilizationWindowSeconds: 0

policies:

- type: Percent

value: 100

periodSeconds: 30

- type: Pods

value: 4

periodSeconds: 30

selectPolicy: Max
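
To see how these settings interact, recall that the HPA computes `desiredReplicas = ceil(currentReplicas * currentMetricValue / targetValue)` for each metric and takes the largest result. The following is a simplified sketch of that calculation plus the `behavior` limits from the manifest. The function names are hypothetical, and the sketch deliberately ignores stabilization windows, the controller's default tolerance, and its exact rounding rules.

```python
import math

MIN_REPLICAS, MAX_REPLICAS = 3, 15  # minReplicas / maxReplicas from hpa.yaml


def proposed_replicas(current: int, metric_ratios: list[float]) -> int:
    """metric_ratios holds currentValue / targetValue for each metric."""
    proposal = max(math.ceil(current * ratio) for ratio in metric_ratios)
    return max(MIN_REPLICAS, min(MAX_REPLICAS, proposal))


def apply_behavior(current: int, proposal: int) -> int:
    """Limit one scaling step using the scaleUp / scaleDown policies."""
    # scaleUp, selectPolicy Max: the larger of +100% and +4 pods
    up_limit = max(math.ceil(current * 2.0), current + 4)
    # scaleDown, selectPolicy Min: the smaller reduction of -50% and -2 pods
    down_limit = max(int(current * 0.5), current - 2)
    return max(down_limit, min(up_limit, proposal))


# CPU at twice its target, memory at half its target, 3 pods running
print(apply_behavior(3, proposed_replicas(3, [2.0, 0.5])))   # 6
# Load drops to a quarter of target with 12 pods running
print(apply_behavior(12, proposed_replicas(12, [0.25])))     # 10
```

The second case shows why scale-down is gradual: the metrics alone would justify 3 replicas, but the `Min` policy removes at most 2 pods per 60-second period.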

6. Terraform - RDS Module (terraform/modules/rds/main.tf)

# terraform/modules/rds/main.tf

locals {

db_name = "${var.environment}-postgres"

tags = merge(

var.tags,

{

Environment = var.environment

ManagedBy = "Terraform"

Component = "Database"

}

)

}

# Subnet Group

resource "aws_db_subnet_group" "main" {

name = "${var.environment}-db-subnet-group"

subnet_ids = var.private_subnet_ids

tags = merge(

local.tags,

{

Name = "${var.environment}-db-subnet-group"

}

)

}

# Security Group

resource "aws_security_group" "rds" {

name_prefix = "${var.environment}-rds-sg"

description = "Security group for RDS Aurora PostgreSQL"

vpc_id = var.vpc_id

ingress {

from_port = 5432

to_port = 5432

protocol = "tcp"

security_groups = [var.eks_security_group_id]

description = "Allow PostgreSQL from EKS"

}

egress {

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

description = "Allow all outbound"

}

tags = merge(

local.tags,

{

Name = "${var.environment}-rds-sg"

}

)

}

# KMS Key for encryption

resource "aws_kms_key" "rds" {

description = "KMS key for RDS encryption in ${var.environment}"

deletion_window_in_days = var.environment == "prod" ? 30 : 7

enable_key_rotation = true

tags = local.tags

}

resource "aws_kms_alias" "rds" {

name = "alias/rds-${var.environment}"

target_key_id = aws_kms_key.rds.key_id

}

# Random password for master user

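resource "random_password" "master" {

length = 32

special = true

# RDS rejects "/", "@", double quotes, and spaces in the master password

override_special = "!#$%&*()-_=+[]{}<>:?"

}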

# Store password in Secrets Manager

resource "aws_secretsmanager_secret" "rds_master_password" {

name = "${var.environment}/rds/master-password"

description = "Master password for RDS Aurora cluster in ${var.environment}"

recovery_window_in_days = var.environment == "prod" ? 30 : 7

kms_key_id = aws_kms_key.rds.id

tags = local.tags

}

resource "aws_secretsmanager_secret_version" "rds_master_password" {

secret_id = aws_secretsmanager_secret.rds_master_password.id

secret_string = jsonencode({

username = "dbadmin"

password = random_password.master.result

engine = "postgres"

host = aws_rds_cluster.main.endpoint

port = 5432

dbname = var.database_name

})

}

# Parameter Group

resource "aws_rds_cluster_parameter_group" "main" {

name = "${var.environment}-aurora-postgres14-cluster-pg"

family = "aurora-postgresql14"

description = "Cluster parameter group for ${var.environment}"

parameter {

name = "log_statement"

value = var.environment == "prod" ? "ddl" : "all"

}

parameter {

name = "log_min_duration_statement"

value = "1000"

}

parameter {

name = "shared_preload_libraries"

value = "pg_stat_statements,auto_explain"

# Static parameter: only takes effect after a reboot

apply_method = "pending-reboot"

}

parameter {

name = "auto_explain.log_min_duration"

value = "5000"

}

parameter {

name = "rds.force_ssl"

value = "1"

}

tags = local.tags

}

resource "aws_db_parameter_group" "main" {

name = "${var.environment}-aurora-postgres14-db-pg"

family = "aurora-postgresql14"

description = "DB parameter group for ${var.environment}"

parameter {

name = "log_rotation_age"

value = "1440"

}

parameter {

name = "log_rotation_size"

value = "102400"

}

tags = local.tags

}

# Aurora Cluster

resource "aws_rds_cluster" "main" {

cluster_identifier = "${var.environment}-aurora-cluster"

engine = "aurora-postgresql"

engine_mode = "provisioned"

engine_version = var.engine_version

database_name = var.database_name

master_username = "dbadmin"

master_password = random_password.master.result

db_subnet_group_name = aws_db_subnet_group.main.name

db_cluster_parameter_group_name = aws_rds_cluster_parameter_group.main.name

vpc_security_group_ids = [aws_security_group.rds.id]

# Backup configuration

backup_retention_period = var.environment == "prod" ? 35 : 7

preferred_backup_window = "03:00-04:00"

preferred_maintenance_window = "mon:04:00-mon:05:00"

# Encryption

storage_encrypted = true

kms_key_id = aws_kms_key.rds.arn

# High availability

availability_zones = var.availability_zones

# Monitoring

enabled_cloudwatch_logs_exports = ["postgresql"]

# Deletion protection

deletion_protection = var.environment == "prod"

skip_final_snapshot = var.environment != "prod"

final_snapshot_identifier = var.environment == "prod" ? "${var.environment}-aurora-final-snapshot-${formatdate("YYYY-MM-DD-hhmm", timestamp())}" : null

# Serverless v2 scaling

serverlessv2_scaling_configuration {

max_capacity = var.environment == "prod" ? 16.0 : 4.0

min_capacity = var.environment == "prod" ? 2.0 : 0.5

}

# Global database configuration (for prod only)

# global_cluster_identifier is a plain argument, not a nested block, so a conditional expression replaces the dynamic block

global_cluster_identifier = var.environment == "prod" && var.enable_global_database ? aws_rds_global_cluster.main[0].id : null

tags = local.tags

lifecycle {

ignore_changes = [

master_password,

final_snapshot_identifier

]

}

}

# Global Database for cross-region replication (prod only)

resource "aws_rds_global_cluster" "main" {

count = var.environment == "prod" && var.enable_global_database ? 1 : 0

global_cluster_identifier = "${var.environment}-global-cluster"

engine = "aurora-postgresql"

engine_version = var.engine_version

database_name = var.database_name

storage_encrypted = true

}

# Aurora Instances

resource "aws_rds_cluster_instance" "main" {

count = var.instance_count

identifier = "${var.environment}-aurora-instance-${count.index + 1}"

cluster_identifier = aws_rds_cluster.main.id

instance_class = "db.serverless"

engine = aws_rds_cluster.main.engine

engine_version = aws_rds_cluster.main.engine_version

db_parameter_group_name = aws_db_parameter_group.main.name

# Performance Insights

performance_insights_enabled = true

performance_insights_kms_key_id = aws_kms_key.rds.arn

performance_insights_retention_period = var.environment == "prod" ? 731 : 7

# Monitoring

monitoring_interval = 60

monitoring_role_arn = aws_iam_role.rds_monitoring.arn

# Auto minor version upgrade

auto_minor_version_upgrade = var.environment != "prod"

publicly_accessible = false

tags = merge(

local.tags,

{

Name = "${var.environment}-aurora-instance-${count.index + 1}"

}

)

}

# Enhanced Monitoring IAM Role

resource "aws_iam_role" "rds_monitoring" {

name = "${var.environment}-rds-enhanced-monitoring"

assume_role_policy = jsonencode({

Version = "2012-10-17"

Statement = [{

Action = "sts:AssumeRole"

Effect = "Allow"

Principal = {

Service = "monitoring.rds.amazonaws.com"

}

}]

})

tags = local.tags

}

resource "aws_iam_role_policy_attachment" "rds_monitoring" {

role = aws_iam_role.rds_monitoring.name

policy_arn = "arn:aws:iam::aws:policy/service-role/AmazonRDSEnhancedMonitoringRole"

}

# CloudWatch Alarms

resource "aws_cloudwatch_metric_alarm" "database_cpu" {

alarm_name = "${var.environment}-rds-high-cpu"

comparison_operator = "GreaterThanThreshold"

evaluation_periods = "2"

metric_name = "CPUUtilization"

namespace = "AWS/RDS"

period = "300"

statistic = "Average"

threshold = "80"

alarm_description = "This metric monitors RDS CPU utilization"

alarm_actions = var.alarm_sns_topic_arn != "" ? [var.alarm_sns_topic_arn] : []

dimensions = {

DBClusterIdentifier = aws_rds_cluster.main.cluster_identifier

}

tags = local.tags

}

resource "aws_cloudwatch_metric_alarm" "database_connections" {

alarm_name = "${var.environment}-rds-high-connections"

comparison_operator = "GreaterThanThreshold"

evaluation_periods = "2"

metric_name = "DatabaseConnections"

namespace = "AWS/RDS"

period = "300"

statistic = "Average"

threshold = var.environment == "prod" ? "500" : "100"

alarm_description = "This metric monitors RDS database connections"

alarm_actions = var.alarm_sns_topic_arn != "" ? [var.alarm_sns_topic_arn] : []

dimensions = {

DBClusterIdentifier = aws_rds_cluster.main.cluster_identifier

}

tags = local.tags

}

resource "aws_cloudwatch_metric_alarm" "database_storage" {

alarm_name = "${var.environment}-rds-low-storage"

comparison_operator = "LessThanThreshold"

evaluation_periods = "1"

metric_name = "FreeLocalStorage"

namespace = "AWS/RDS"

period = "300"

statistic = "Average"

threshold = "10737418240" # 10 GB in bytes

alarm_description = "This metric monitors RDS free storage space"

alarm_actions = var.alarm_sns_topic_arn != "" ? [var.alarm_sns_topic_arn] : []

dimensions = {

DBClusterIdentifier = aws_rds_cluster.main.cluster_identifier

}

tags = local.tags

}

# Outputs

output "cluster_endpoint" {

description = "Writer endpoint for the cluster"

value = aws_rds_cluster.main.endpoint

}

output "cluster_reader_endpoint" {

description = "Reader endpoint for the cluster"

value = aws_rds_cluster.main.reader_endpoint

}

output "cluster_id" {

description = "The RDS cluster ID"

value = aws_rds_cluster.main.id

}

output "cluster_arn" {

description = "The RDS cluster ARN"

value = aws_rds_cluster.main.arn

}

output "global_cluster_id" {

description = "ID of the Aurora global cluster (null when the global database is disabled)"

value = one(aws_rds_global_cluster.main[*].id)

}

output "database_name" {

description = "Name of the database"

value = aws_rds_cluster.main.database_name

}

output "master_username" {

description = "Master username"

value = aws_rds_cluster.main.master_username

sensitive = true

}

output "security_group_id" {

description = "Security group ID for RDS"

value = aws_security_group.rds.id

}

output "secret_arn" {

description = "ARN of the Secrets Manager secret containing database credentials"

value = aws_secretsmanager_secret.rds_master_password.arn

}
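
On the application side, the JSON stored in Secrets Manager by this module can be turned into a connection string. The snippet below is a minimal sketch under a few assumptions: `dsn_from_secret` is a hypothetical helper, the host name is a placeholder, and `sslmode=require` is added because the cluster parameter group sets `rds.force_ssl` to `1`. Fetching the secret itself (for example with the AWS SDK) is left out.

```python
import json
from urllib.parse import quote


def dsn_from_secret(secret_string: str) -> str:
    """Build a PostgreSQL URI from the secret's JSON payload."""
    secret = json.loads(secret_string)
    # Percent-encode credentials so special characters do not break the URI
    user = quote(secret["username"], safe="")
    password = quote(secret["password"], safe="")
    return (
        f"postgresql://{user}:{password}@{secret['host']}:{secret['port']}"
        f"/{secret['dbname']}?sslmode=require"
    )


# Example payload with the same keys the module writes (values are made up)
example = json.dumps({
    "username": "dbadmin",
    "password": "p#ss:w?rd",
    "engine": "postgres",
    "host": "db.example.internal",
    "port": 5432,
    "dbname": "appdb",
})
print(dsn_from_secret(example))
```

Encoding the password matters here because `random_password` is configured with `special = true`, so the generated value can contain characters that are reserved in URIs.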

7. Terraform - Production Environment with DR (terraform/environments/prod/main.tf)

# terraform/environments/prod/main.tf

terraform {

required_version = ">= 1.6.0"

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 5.0"

}

kubernetes = {

source = "hashicorp/kubernetes"

version = "~> 2.23"

}

helm = {

source = "hashicorp/helm"

version = "~> 2.11"

}

random = {

source = "hashicorp/random"

version = "~> 3.5"

}

}

backend "s3" {

bucket = "terraform-state-prod-123456789"

key = "prod/terraform.tfstate"

region = "us-east-1"

encrypt = true

dynamodb_table = "terraform-state-lock-prod"

kms_key_id = "arn:aws:kms:us-east-1:123456789:key/xxxxx"

}

}

# Primary Region Provider (us-east-1)

provider "aws" {

region = "us-east-1"

default_tags {

tags = {

Environment = "production"

Project = "3-tier-app"

ManagedBy = "Terraform"

CostCenter = "Engineering"

}

}

}

# DR Region Provider (us-west-2)

provider "aws" {

alias = "dr"

region = "us-west-2"

default_tags {

tags = {

Environment = "production-dr"

Project = "3-tier-app"

ManagedBy = "Terraform"

CostCenter = "Engineering"

}

}

}

data "aws_caller_identity" "current" {}

data "aws_region" "current" {}

locals {

environment = "prod"

project = "3tier-app"

primary_region = "us-east-1"

dr_region = "us-west-2"

vpc_cidr = "10.0.0.0/16"

dr_vpc_cidr = "10.1.0.0/16"

azs = ["us-east-1a", "us-east-1b", "us-east-1c"]

dr_azs = ["us-west-2a", "us-west-2b", "us-west-2c"]

}

# ============================================

# PRIMARY REGION (us-east-1) - NETWORKING

# ============================================

module "networking_primary" {

source = "../../modules/networking"

environment = local.environment

vpc_cidr = local.vpc_cidr

availability_zones = local.azs

enable_nat_gateway = true

single_nat_gateway = false

enable_vpn_gateway = false

enable_flow_logs = true

tags = {

Region = "primary"

}

}

# ============================================

# PRIMARY REGION - EKS CLUSTER

# ============================================

module "eks_primary" {

source = "../../modules/eks"

environment = local.environment

vpc_id = module.networking_primary.vpc_id

private_subnet_ids = module.networking_primary.private_subnet_ids

allowed_cidr_blocks = ["10.0.0.0/8"]

kubernetes_version = "1.28"

node_instance_types = ["m6i.xlarge", "m6a.xlarge"]

node_desired_size = 10

node_min_size = 5

node_max_size = 50

vpc_cni_version = "v1.15.1-eksbuild.1"

coredns_version = "v1.10.1-eksbuild.4"

kube_proxy_version = "v1.28.2-eksbuild.2"

ebs_csi_driver_version = "v1.25.0-eksbuild.1"

grafana_admin_password = var.grafana_admin_password

tags = {

Region = "primary"

}

}

# ============================================

# PRIMARY REGION - RDS AURORA

# ============================================

module "rds_primary" {

source = "../../modules/rds"

environment = local.environment

vpc_id = module.networking_primary.vpc_id

private_subnet_ids = module.networking_primary.private_subnet_ids

eks_security_group_id = module.eks_primary.cluster_security_group_id

availability_zones = local.azs

database_name = "appdb"

engine_version = "14.9"

instance_count = 3

enable_global_database = true

alarm_sns_topic_arn = aws_sns_topic.alerts_primary.arn

tags = {

Region = "primary"

}

}

# ============================================

# PRIMARY REGION - ELASTICACHE REDIS

# ============================================

module "elasticache_primary" {

source = "../../modules/elasticache"

environment = local.environment

vpc_id = module.networking_primary.vpc_id

private_subnet_ids = module.networking_primary.private_subnet_ids

eks_security_group_id = module.eks_primary.cluster_security_group_id

node_type = "cache.r7g.xlarge"

num_cache_nodes = 3

parameter_group_family = "redis7"

engine_version = "7.0"

tags = {

Region = "primary"

}

}

# ============================================

# PRIMARY REGION - ECR

# ============================================

module "ecr_primary" {

source = "../../modules/ecr"

environment = local.environment

repositories = ["frontend", "backend", "worker"]

enable_replication = true

replication_destinations = [local.dr_region]

tags = {

Region = "primary"

}

}

# ============================================

# PRIMARY REGION - ALB

# ============================================

module "alb_primary" {

source = "../../modules/alb"

environment = local.environment

vpc_id = module.networking_primary.vpc_id

public_subnet_ids = module.networking_primary.public_subnet_ids

certificate_arn = var.acm_certificate_arn

enable_waf = true

enable_shield = true

tags = {

Region = "primary"

}

}

# ============================================

# PRIMARY REGION - MONITORING

# ============================================

module "monitoring_primary" {

source = "../../modules/monitoring"

environment = local.environment

eks_cluster_name = module.eks_primary.cluster_id

rds_cluster_id = module.rds_primary.cluster_id

elasticache_id = module.elasticache_primary.cluster_id

alb_arn_suffix = module.alb_primary.alb_arn_suffix

alarm_sns_topic_arn = aws_sns_topic.alerts_primary.arn

tags = {

Region = "primary"

}

}

# SNS Topic for Alerts

resource "aws_sns_topic" "alerts_primary" {

name = "${local.environment}-alerts-primary"

kms_master_key_id = aws_kms_key.sns_primary.id

tags = {

Region = "primary"

}

}

resource "aws_sns_topic_subscription" "alerts_email_primary" {

topic_arn = aws_sns_topic.alerts_primary.arn

protocol = "email"

endpoint = var.alert_email

}

resource "aws_kms_key" "sns_primary" {

description = "KMS key for SNS encryption in ${local.environment}"

deletion_window_in_days = 30

enable_key_rotation = true

}

# ============================================

# DR REGION (us-west-2) - NETWORKING

# ============================================

module "networking_dr" {

source = "../../modules/networking"

providers = {

aws = aws.dr

}

environment = "${local.environment}-dr"

vpc_cidr = local.dr_vpc_cidr

availability_zones = local.dr_azs

enable_nat_gateway = true

single_nat_gateway = false

enable_vpn_gateway = false

enable_flow_logs = true

tags = {

Region = "dr"

}

}

# ============================================

# DR REGION - EKS CLUSTER

# ============================================

module "eks_dr" {

source = "../../modules/eks"

providers = {

aws = aws.dr

}

environment = "${local.environment}-dr"

vpc_id = module.networking_dr.vpc_id

private_subnet_ids = module.networking_dr.private_subnet_ids

allowed_cidr_blocks = ["10.0.0.0/8"]

kubernetes_version = "1.28"

node_instance_types = ["m6i.xlarge", "m6a.xlarge"]

node_desired_size = 3 # Smaller for standby

node_min_size = 2

node_max_size = 30

vpc_cni_version = "v1.15.1-eksbuild.1"

coredns_version = "v1.10.1-eksbuild.4"

kube_proxy_version = "v1.28.2-eksbuild.2"

ebs_csi_driver_version = "v1.25.0-eksbuild.1"

grafana_admin_password = var.grafana_admin_password

tags = {

Region = "dr"

}

}

# ============================================

# DR REGION - RDS AURORA (Global Database Secondary)

# ============================================

resource "aws_rds_cluster" "dr" {

provider = aws.dr

cluster_identifier = "${local.environment}-aurora-cluster-dr"

engine = "aurora-postgresql"

engine_version = "14.9"

global_cluster_identifier = module.rds_primary.global_cluster_id

db_subnet_group_name = aws_db_subnet_group.dr.name

vpc_security_group_ids = [aws_security_group.rds_dr.id]

skip_final_snapshot = false

final_snapshot_identifier = "${local.environment}-aurora-dr-final-${formatdate("YYYY-MM-DD-hhmm", timestamp())}"

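depends_on = [module.rds_primary]

# timestamp() yields a new value on every plan; ignore it after creation to avoid a perpetual diff

lifecycle {

ignore_changes = [final_snapshot_identifier]

}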

tags = {

Region = "dr"

}

}

resource "aws_db_subnet_group" "dr" {

provider = aws.dr

name = "${local.environment}-db-subnet-group-dr"

subnet_ids = module.networking_dr.private_subnet_ids

tags = {

Region = "dr"

}

}

resource "aws_security_group" "rds_dr" {

provider = aws.dr

name_prefix = "${local.environment}-rds-sg-dr"

description = "Security group for RDS Aurora PostgreSQL in DR"

vpc_id = module.networking_dr.vpc_id

ingress {

from_port = 5432

to_port = 5432

protocol = "tcp"

security_groups = [module.eks_dr.cluster_security_group_id]

description = "Allow PostgreSQL from EKS DR"

}

egress {

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

}

tags = {

Region = "dr"

}

}

resource "aws_rds_cluster_instance" "dr" {

provider = aws.dr

count = 2

identifier = "${local.environment}-aurora-instance-dr-${count.index + 1}"

cluster_identifier = aws_rds_cluster.dr.id

instance_class = "db.serverless"

engine = aws_rds_cluster.dr.engine

engine_version = aws_rds_cluster.dr.engine_version

performance_insights_enabled = true

monitoring_interval = 60

tags = {

Region = "dr"

}

}

# ============================================

# DR REGION - ELASTICACHE REDIS

# ============================================

module "elasticache_dr" {

source = "../../modules/elasticache"

providers = {

aws = aws.dr

}

environment = "${local.environment}-dr"

vpc_id = module.networking_dr.vpc_id

private_subnet_ids = module.networking_dr.private_subnet_ids

eks_security_group_id = module.eks_dr.cluster_security_group_id

node_type = "cache.r7g.large" # Smaller for DR

num_cache_nodes = 2

parameter_group_family = "redis7"

engine_version = "7.0"

tags = {

Region = "dr"

}

}

# ============================================

# ROUTE 53 - GLOBAL DNS WITH HEALTH CHECKS

# ============================================

resource "aws_route53_zone" "main" {

name = var.domain_name

tags = {

Environment = local.environment

}

}

# Health check for primary region

resource "aws_route53_health_check" "primary" {

fqdn = module.alb_primary.alb_dns_name

port = 443

type = "HTTPS"

resource_path = "/health"

failure_threshold = "3"

request_interval = "30"

tags = {

Name = "${local.environment}-primary-health-check"

}

}

# Primary region DNS record

resource "aws_route53_record" "primary" {

zone_id = aws_route53_zone.main.zone_id

name = "app.${var.domain_name}"

type = "A"

set_identifier = "primary"

failover_routing_policy {

type = "PRIMARY"

}

health_check_id = aws_route53_health_check.primary.id

alias {

name = module.alb_primary.alb_dns_name

zone_id = module.alb_primary.alb_zone_id

evaluate_target_health = true

}

}

# DR region DNS record

resource "aws_route53_record" "dr" {

zone_id = aws_route53_zone.main.zone_id

name = "app.${var.domain_name}"

type = "A"

set_identifier = "dr"

failover_routing_policy {

type = "SECONDARY"

}

alias {

name = module.alb_dr.alb_dns_name

zone_id = module.alb_dr.alb_zone_id

evaluate_target_health = true

}

}

# ============================================

# CLOUDFRONT FOR GLOBAL CDN

# ============================================

resource "aws_cloudfront_distribution" "main" {

enabled = true

is_ipv6_enabled = true

comment = "${local.environment} CDN distribution"

default_root_object = "index.html"

price_class = "PriceClass_All"

aliases = [var.domain_name, "www.${var.domain_name}"]

origin {

domain_name = "app.${var.domain_name}"

origin_id = "ALB"

custom_origin_config {

http_port = 80

https_port = 443

origin_protocol_policy = "https-only"

origin_ssl_protocols = ["TLSv1.2"]

}

}

default_cache_behavior {

allowed_methods = ["DELETE", "GET", "HEAD", "OPTIONS", "PATCH", "POST", "PUT"]

cached_methods = ["GET", "HEAD"]

target_origin_id = "ALB"

forwarded_values {

query_string = true

headers = ["Host", "Authorization"]

cookies {

forward = "all"

}

}

viewer_protocol_policy = "redirect-to-https"

min_ttl = 0

default_ttl = 3600

max_ttl = 86400

compress = true

}

restrictions {

geo_restriction {

restriction_type = "none"

}

}

viewer_certificate {

acm_certificate_arn = var.cloudfront_certificate_arn

ssl_support_method = "sni-only"

minimum_protocol_version = "TLSv1.2_2021"

}

web_acl_id = aws_wafv2_web_acl.cloudfront.arn

tags = {

Environment = local.environment

}

}

# ============================================

# OUTPUTS

# ============================================

output "primary_eks_cluster_endpoint" {

description = "Primary EKS cluster endpoint"

value = module.eks_primary.cluster_endpoint

}

output "dr_eks_cluster_endpoint" {

description = "DR EKS cluster endpoint"

value = module.eks_dr.cluster_endpoint

}

output "primary_rds_endpoint" {

description = "Primary RDS cluster endpoint"

value = module.rds_primary.cluster_endpoint

sensitive = true

}

output "dr_rds_endpoint" {

description = "DR RDS cluster endpoint"

value = aws_rds_cluster.dr.endpoint

sensitive = true

}

output "cloudfront_domain_name" {

description = "CloudFront distribution domain name"

value = aws_cloudfront_distribution.main.domain_name

}

output "route53_nameservers" {

description = "Route53 nameservers"

value = aws_route53_zone.main.name_servers

}
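
The primary and DR VPCs use separate /16 ranges, and both EKS clusters allow traffic from `10.0.0.0/8`. A quick way to check that such a plan stays consistent is sketched below using Python's standard `ipaddress` module. The helper names are hypothetical, and the check only becomes strictly necessary if the two VPCs are ever connected (peering or a transit gateway), which this listing does not configure.

```python
import ipaddress


def cidrs_overlap(a: str, b: str) -> bool:
    """True if the two CIDR blocks share any address."""
    return ipaddress.ip_network(a).overlaps(ipaddress.ip_network(b))


def within(child: str, parent: str) -> bool:
    """True if child is fully contained in parent."""
    return ipaddress.ip_network(child).subnet_of(ipaddress.ip_network(parent))


vpc_cidr = "10.0.0.0/16"      # local.vpc_cidr
dr_vpc_cidr = "10.1.0.0/16"   # local.dr_vpc_cidr
allowed = "10.0.0.0/8"        # allowed_cidr_blocks on both EKS modules

print(cidrs_overlap(vpc_cidr, dr_vpc_cidr))  # False
print(within(vpc_cidr, allowed), within(dr_vpc_cidr, allowed))  # True True
```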

8. Deployment Script (scripts/deploy-k8s.sh)

#!/bin/bash

# scripts/deploy-k8s.sh

set -euo pipefail

# Colors for output

RED='\033[0;31m'

GREEN='\033[0;32m'

YELLOW='\033[1;33m'

NC='\033[0m' # No Color

# Configuration

ENVIRONMENT=${1:-dev}

IMAGE_TAG=${2:-latest}

AWS_REGION=${AWS_REGION:-us-east-1}

AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)

ECR_REGISTRY="${AWS_ACCOUNT_ID}.dkr.ecr.${AWS_REGION}.amazonaws.com"

echo -e "${GREEN}========================================${NC}"

echo -e "${GREEN}Deploying to ${ENVIRONMENT} environment${NC}"

echo -e "${GREEN}Image Tag: ${IMAGE_TAG}${NC}"

echo -e "${GREEN}========================================${NC}"

# Function to print colored messages

print_info() {

echo -e "${GREEN}[INFO]${NC} $1"

}

print_warn() {

echo -e "${YELLOW}[WARN]${NC} $1"

}

print_error() {

echo -e "${RED}[ERROR]${NC} $1"

}

# Function to check if command exists

command_exists() {

command -v "$1" >/dev/null 2>&1

}

# Check required tools

print_info "Checking required tools..."

for cmd in kubectl aws docker helm; do

if ! command_exists "$cmd"; then

print_error "$cmd is not installed. Please install it first."

exit 1

fi

done

# Get EKS cluster name

CLUSTER_NAME="${ENVIRONMENT}-eks-cluster"

# Update kubeconfig

print_info "Updating kubeconfig for cluster: ${CLUSTER_NAME}"

aws eks update-kubeconfig --name "${CLUSTER_NAME}" --region "${AWS_REGION}"

# Verify cluster connection

print_info "Verifying cluster connection..."

if ! kubectl cluster-info &>/dev/null; then

print_error "Failed to connect to Kubernetes cluster"

exit 1

fi

print_info "Connected to cluster: $(kubectl config current-context)"

# Create namespaces if they don't exist

print_info "Creating namespaces..."

for ns in frontend backend monitoring logging; do

if ! kubectl get namespace "$ns" &>/dev/null; then

kubectl create namespace "$ns"

print_info "Created namespace: $ns"

else

print_info "Namespace $ns already exists"

fi

done

# Apply ConfigMaps

print_info "Applying ConfigMaps..."

kubectl apply -f kubernetes/base/backend/configmap.yaml -n backend

kubectl apply -f kubernetes/base/frontend/configmap.yaml -n frontend

# Get RDS and Redis endpoints from Terraform outputs

print_info "Fetching infrastructure endpoints..."

cd terraform/environments/${ENVIRONMENT}

RDS_ENDPOINT=$(terraform output -raw primary_rds_endpoint 2>/dev/null || echo "")

REDIS_ENDPOINT=$(terraform output -raw primary_redis_endpoint 2>/dev/null || echo "")

cd - > /dev/null

if [ -z "$RDS_ENDPOINT" ] || [ -z "$REDIS_ENDPOINT" ]; then

print_error "Failed to fetch infrastructure endpoints from Terraform"

exit 1

fi

# Create or update secrets

print_info "Creating/updating Kubernetes secrets..."

# Backend secrets

kubectl create secret generic backend-secrets \

--from-literal=database_host="${RDS_ENDPOINT}" \

--from-literal=database_port="5432" \

--from-literal=database_name="appdb" \

--from-literal=database_user="dbadmin" \

--from-literal=database_password="$(aws secretsmanager get-secret-value --secret-id ${ENVIRONMENT}/rds/master-password --query SecretString --output text | jq -r .password)" \

--from-literal=redis_host="${REDIS_ENDPOINT}" \

--from-literal=redis_port="6379" \

--namespace=backend \

--dry-run=client -o yaml | kubectl apply -f -

print_info "Secrets created/updated successfully"

# Build and push Docker images

print_info "Building and pushing Docker images..."

# Login to ECR

print_info "Logging in to ECR..."

aws ecr get-login-password --region "${AWS_REGION}" | docker login --username AWS --password-stdin "${ECR_REGISTRY}"

# Build and push backend

print_info "Building backend image..."

docker build -t backend:${IMAGE_TAG} -f docker/backend/Dockerfile .

docker tag backend:${IMAGE_TAG} ${ECR_REGISTRY}/backend:${IMAGE_TAG}

docker push ${ECR_REGISTRY}/backend:${IMAGE_TAG}

# Build and push frontend

print_info "Building frontend image..."

docker build -t frontend:${IMAGE_TAG} -f docker/frontend/Dockerfile .

docker tag frontend:${IMAGE_TAG} ${ECR_REGISTRY}/frontend:${IMAGE_TAG}

docker push ${ECR_REGISTRY}/frontend:${IMAGE_TAG}

print_info "Docker images pushed successfully"

# Update image tags in deployment manifests

print_info "Updating deployment manifests with new image tags..."

sed -i.bak "s|image: .*backend:.*|image: ${ECR_REGISTRY}/backend:${IMAGE_TAG}|g" kubernetes/base/backend/deployment.yaml

sed -i.bak "s|image: .*frontend:.*|image: ${ECR_REGISTRY}/frontend:${IMAGE_TAG}|g" kubernetes/base/frontend/deployment.yaml

# Apply RBAC

print_info "Applying RBAC configurations..."

kubectl apply -f kubernetes/base/backend/serviceaccount.yaml -n backend

kubectl apply -f kubernetes/base/frontend/serviceaccount.yaml -n frontend

# Apply deployments

print_info "Applying deployments..."

kubectl apply -f kubernetes/base/backend/deployment.yaml -n backend

kubectl apply -f kubernetes/base/frontend/deployment.yaml -n frontend

# Apply services

print_info "Applying services..."

kubectl apply -f kubernetes/base/backend/service.yaml -n backend

kubectl apply -f kubernetes/base/frontend/service.yaml -n frontend

# Apply HPA

print_info "Applying Horizontal Pod Autoscalers..."

kubectl apply -f kubernetes/base/backend/hpa.yaml -n backend

kubectl apply -f kubernetes/base/frontend/hpa.yaml -n frontend

# Apply Ingress

print_info "Applying Ingress..."

kubectl apply -f kubernetes/base/ingress.yaml

# Wait for deployments to be ready

print_info "Waiting for deployments to be ready..."

kubectl rollout status deployment/backend -n backend --timeout=5m

kubectl rollout status deployment/frontend -n frontend --timeout=5m

# Check pod status

print_info "Checking pod status..."

kubectl get pods -n backend

kubectl get pods -n frontend

# Get Ingress URL

print_info "Getting application URL..."

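# Tolerate a missing ingress or a pending load balancer so the warning below can be printed

INGRESS_HOST=$(kubectl get ingress -n frontend -o jsonpath='{.items[0].status.loadBalancer.ingress[0].hostname}' 2>/dev/null || true)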

if [ -n "$INGRESS_HOST" ]; then

print_info "Application is available at: https://${INGRESS_HOST}"

else

print_warn "Ingress hostname not yet available. Please check later with: kubectl get ingress -n frontend"

fi

# Display service endpoints

print_info "Service endpoints:"

kubectl get svc -n backend

kubectl get svc -n frontend

# Cleanup backup files

rm -f kubernetes/base/backend/deployment.yaml.bak

rm -f kubernetes/base/frontend/deployment.yaml.bak

print_info "${GREEN}========================================${NC}"

print_info "${GREEN}Deployment completed successfully!${NC}"

print_info "${GREEN}========================================${NC}"

# Display useful commands

echo ""

print_info "Useful commands:"

echo " - View backend logs: kubectl logs -f deployment/backend -n backend"

echo " - View frontend logs: kubectl logs -f deployment/frontend -n frontend"

echo " - Scale backend: kubectl scale deployment/backend --replicas=5 -n backend"

echo " - Port forward backend: kubectl port-forward svc/backend 8080:80 -n backend"

echo " - View metrics: kubectl top pods -n backend"

echo ""
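
The `sed` step in the script rewrites the `image:` line in each deployment manifest. To make the pattern's behavior easier to reason about, here is an illustrative Python equivalent. It is not part of the script: `set_image` is a hypothetical name, and the account IDs and tag are placeholders.

```python
import re


def set_image(manifest: str, name: str, registry: str, tag: str) -> str:
    """Replace any 'image: ...<name>:<tag>' line with the new registry and tag."""
    pattern = rf"image: .*{re.escape(name)}:.*"
    replacement = f"image: {registry}/{name}:{tag}"
    # A function replacement avoids backslash interpretation in the new value
    return re.sub(pattern, lambda _match: replacement, manifest)


manifest = (
    "      containers:\n"
    "        - name: backend\n"
    "          image: 123456789012.dkr.ecr.us-east-1.amazonaws.com/backend:old\n"
)
updated = set_image(
    manifest, "backend", "111122223333.dkr.ecr.us-east-1.amazonaws.com", "v1.2.3"
)
print(updated)
```

Because the pattern starts at `image:` and `.` does not cross line breaks, indentation and the surrounding lines are left untouched. The same looseness means any other container whose image name ends in `backend` would be rewritten as well, so a tool such as Kustomize may be a safer choice for manifests with sidecars.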

9. CI/CD Pipeline - GitHub Actions (.github/workflows/deploy.yml)

# .github/workflows/deploy.yml

name: Build and Deploy

on:

push:

branches:

- main

- develop

- 'release/**'

pull_request:

branches:

- main

- develop

env:

AWS_REGION: us-east-1

TERRAFORM_VERSION: 1.6.0

KUBECTL_VERSION: 1.28.0

jobs:

# ==========================================

# Code Quality and Security Checks

# ==========================================

code-quality:

name: Code Quality & Security

runs-on: ubuntu-latest

steps:

- name: Checkout code

uses: actions/checkout@v4

with:

fetch-depth: 0

- name: Set up Python

uses: actions/setup-python@v4

with:

python-version: '3.11'

- name: Install dependencies

run: |

pip install pylint bandit safety pytest pytest-cov

- name: Run Pylint

run: |

pylint backend/ --exit-zero

- name: Run Bandit (Security)

run: |

bandit -r backend/ -f json -o bandit-report.json

continue-on-error: true

- name: Run Safety (Dependency Check)

run: |

safety check --json

continue-on-error: true

- name: Run Tests

run: |

pytest backend/tests/ --cov=backend --cov-report=xml

- name: Upload coverage to Codecov

uses: codecov/codecov-action@v3

with:

file: ./coverage.xml

flags: backend

- name: SonarCloud Scan

uses: SonarSource/sonarcloud-github-action@master

env:

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

SONAR_TOKEN: ${{ secrets.SONAR_TOKEN }}

  # ==========================================
  # Terraform Plan
  # ==========================================
  terraform-plan:
    name: Terraform Plan
    runs-on: ubuntu-latest
    needs: code-quality
    strategy:
      matrix:
        # One plan per environment directory under terraform/environments/
        environment: [dev, staging, prod]
    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Configure AWS credentials
        uses: aws-actions/configure-aws-credentials@v4
        with:
          aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          aws-region: ${{ env.AWS_REGION }}

      - name: Setup Terraform
        uses: hashicorp/setup-terraform@v3
        with:
          terraform_version: ${{ env.TERRAFORM_VERSION }}

      - name: Terraform Format Check
        run: terraform fmt -check -recursive
        working-directory: terraform/

      - name: Terraform Init
        run: terraform init -input=false
        working-directory: terraform/environments/${{ matrix.environment }}

      - name: Terraform Validate
        run: terraform validate
        working-directory: terraform/environments/${{ matrix.environment }}

      - name: Terraform Plan
        run: |
          terraform plan -out=tfplan -input=false
        working-directory: terraform/environments/${{ matrix.environment }}
        env:
          TF_VAR_environment: ${{ matrix.environment }}

      # upload-artifact v3 has been retired; v4 requires a unique name per job,
      # which the environment suffix provides
      - name: Upload Terraform Plan
        uses: actions/upload-artifact@v4
        with:
          name: tfplan-${{ matrix.environment }}
          path: terraform/environments/${{ matrix.environment }}/tfplan
          retention-days: 5

  # ==========================================
  # Build Docker Images
  # ==========================================
  build:
    name: Build Docker Images
    runs-on: ubuntu-latest
    needs: code-quality
    # upload-sarif needs security-events: write to publish scan results
    permissions:
      contents: read
      security-events: write
    outputs:
      # Note: `tags` is a newline-separated list of full image references
      image-tag: ${{ steps.meta.outputs.tags }}
    steps:
      - name: Checkout code
        uses: actions/checkout@v4

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3

      - name: Configure AWS credentials
        uses: aws-actions/configure-aws-credentials@v4
        with:
          aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          aws-region: ${{ env.AWS_REGION }}

      - name: Login to Amazon ECR
        id: login-ecr
        uses: aws-actions/amazon-ecr-login@v2

      - name: Extract metadata
        id: meta
        uses: docker/metadata-action@v5
        with:
          images: ${{ steps.login-ecr.outputs.registry }}/backend
          tags: |
            type=ref,event=branch
            type=ref,event=pr
            type=semver,pattern={{version}}
            type=semver,pattern={{major}}.{{minor}}
            type=sha,prefix={{branch}}-
            type=raw,value=${{ github.sha }}

      # The raw full-SHA tag above gives the backend image the same
      # <registry>/backend:<sha> reference that the Trivy scan below pulls
      - name: Build and push Backend image
        uses: docker/build-push-action@v5
        with:
          context: .
          file: ./docker/backend/Dockerfile
          push: true
          tags: ${{ steps.meta.outputs.tags }}
          labels: ${{ steps.meta.outputs.labels }}
          cache-from: type=gha
          cache-to: type=gha,mode=max
          build-args: |
            BUILD_DATE=${{ github.event.head_commit.timestamp }}
            VCS_REF=${{ github.sha }}

      - name: Build and push Frontend image
        uses: docker/build-push-action@v5
        with:
          context: .
          file: ./docker/frontend/Dockerfile
          push: true
          tags: ${{ steps.login-ecr.outputs.registry }}/frontend:${{ github.sha }}
          cache-from: type=gha
          cache-to: type=gha,mode=max

      # Only the backend image is scanned here
      - name: Run Trivy vulnerability scanner
        uses: aquasecurity/trivy-action@master
        with:
          image-ref: ${{ steps.login-ecr.outputs.registry }}/backend:${{ github.sha }}
          format: 'sarif'
          output: 'trivy-results.sarif'

      - name: Upload Trivy results to GitHub Security
        uses: github/codeql-action/upload-sarif@v3
        with:
          sarif_file: 'trivy-results.sarif'

  # ==========================================
  # Deploy to Development
  # ==========================================
  deploy-dev:
    name: Deploy to Development
    runs-on: ubuntu-latest
    needs: [terraform-plan, build]
    if: github.ref == 'refs/heads/develop'
    environment:
      name: development